Did you see all the fuss about the recent Amelia Earhart documentary on the History Channel? A picture, found in the National Archives, purportedly shows Earhart and her navigator, Fred Noonan, sitting on a dock at Jaluit Atoll in the Marshall Islands with their plane sitting on a barge in the distance. This photo, which would have been taken after the crash, is used in the documentary to argue that the two survived the crash, were captured and imprisoned by the Japanese while the US Government performed a cover-up, and eventually died or were killed in prison.

This picture and the story were investigated with the latest scientific techniques by a former Assistant Director of the FBI. The latest facial and body recognition techniques were used to positively identify both Earhart and Noonan, and impressive forensic techniques were used to authenticate the photograph as unaltered. Even the airplane spotted in the background is an exact physical match for the Lockheed Model 10 Electra that Earhart was flying.

The documentary is very convincing and brings to bear the latest scientific evidence and investigative methods by leading experts to show what might have really happened to Earhart, and the newly discovered photograph is the key that has unlocked the mystery. Every major news network and US newspaper has covered this exciting new development and the implications of the bombshell new finding.

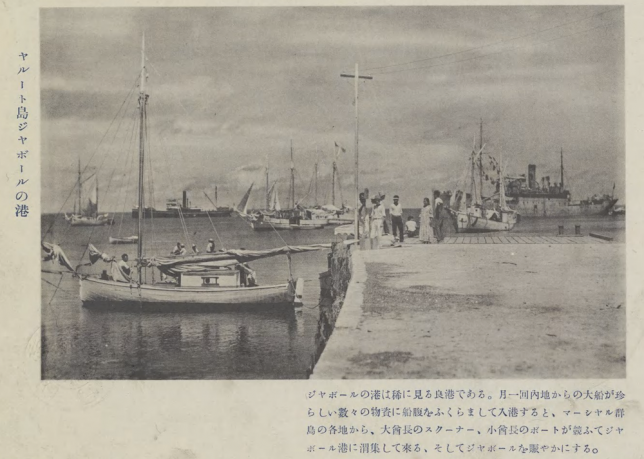

There’s just one problem: it isn’t true. The photo has nothing to do with Earhart, Noonan, or their plane, and it was already in print at least two years before they disappeared in 1937. It took Kota Yamano less than thirty minutes to find the source of the photograph online (yet somehow the highly trained experts and former FBI agents who investigated it for months fell for the fake photo). Here is the photograph in a Japanese travel book published in 1935:

So what lessons can we learn from this ridiculous mistake, other than that the History Channel stopped being a source of quality programming a long time ago?

Experts are wrong all the time. The world-class experts who opined about the photograph were all brazenly wrong; as experts, they had too much faith in their techniques and too little skepticism. Experts disagree with one another all the time in every field, so it follows that most experts are wrong most of the time about most things. An expert is only as good as the evidence he cites, and you should never simply take the word of an expert; that is a logical fallacy called an appeal to authority. Assess the evidence they present independently; it is the evidence that matters. The Japanese blogger who showed that the photo was a fake doesn’t have any of the “expertise” claimed by the men in the documentary, but he was right and they were wrong. Don’t over-value scientific studies because they were performed at a leading university or by a leading personality; judge them on the evidence.

Reporters who cover stories like this are more interested in ratings and hype than in fact-checking and counter-narratives. Dozens of reporters did feature pieces on this Earhart story, mostly just repeating the PR talking points provided to them by the History Channel. Not one of them spent even a few minutes fact-checking the key element of the story. The reporters who wrote about it had neither the knowledge base nor the desire to investigate the claims made by the filmmakers. When news organizations report on scientific and health publications, they do no better. Critical analysis is almost never a part of the coverage, and what gets covered is usually selected for its ability to attract clicks and readers; this means that reporters are happy to report on wild and outlandish health claims and studies (“low probability hypotheses”) because they fit the “man bites dog” mold, even though it’s usually the dog who bites the man.

Improbable hypotheses require incontrovertible evidence to validate them. Bayes’ theorem teaches us clearly that if you are going to accept some claim as likely true, the claim needs either a very high pretest probability or very firm and sound evidence that supports it (ideally you would have both). The claim that Earhart and Noonan were in that particular photograph was a low-probability hypothesis, so the low-quality “evidence” presented in the documentary that it was them simply wasn’t enough to matter. Most published claims in medical science are also low-probability claims. Studies that report on things like the association between low levels of Vitamin D and treatment failure in Nepalese children with pneumonia (published this week in Nature) are so unlikely to be meaningfully related (that is, the claim has a low pre-study probability) that it doesn’t matter whether the authors found a “statistically significant” result in their data or not: it’s garbage. Remember, about 80% of the medical literature published this year will eventually be disproven; you’ll have a better idea of which studies are bunk if you learn to consider pre-study probability.
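To make the arithmetic concrete, here is a minimal sketch of Bayes’ theorem applied to a single “positive” study. The function name and all of the numbers (a 1% versus 50% pre-study probability, 80% power, a 5% false-positive rate) are illustrative assumptions, not figures from any study mentioned here.

```python
def posterior_probability(prior, power, false_positive_rate):
    """Return P(claim is true | positive finding) using Bayes' theorem.

    prior               -- pre-study probability that the claim is true
    power               -- chance of a positive finding if the claim is true
    false_positive_rate -- chance of a positive finding if the claim is false
    """
    true_positive = power * prior
    false_positive = false_positive_rate * (1.0 - prior)
    return true_positive / (true_positive + false_positive)


# Illustrative (assumed) inputs: the same quality of evidence applied to
# a long-shot claim and to a plausible one.
long_shot = posterior_probability(prior=0.01, power=0.8, false_positive_rate=0.05)
plausible = posterior_probability(prior=0.50, power=0.8, false_positive_rate=0.05)

print(f"long-shot claim after a positive study: {long_shot:.2f}")  # 0.14
print(f"plausible claim after a positive study: {plausible:.2f}")  # 0.94
```

Under these assumed inputs, the same “statistically significant” finding leaves the long-shot claim still probably false, while the plausible claim becomes very likely true. The difference comes entirely from the pre-study probability.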

Just because the data points are explained by a narrative doesn’t mean that that narrative is the only explanation for the data points. The History Channel filmmakers very skillfully wove together a plot that was seemingly supported by the “evidence.” Apart from the fact that the “evidence” was false, we must also consider that many other stories and narratives fit the same data points. There is always more than one explanation (or more than one hypothesis) that explains the data. The filmmakers already had their minds made up about which story was true, so they framed all the data in that context; they just as skillfully could have “proven” several other stories with the same “facts.” Narrative is everything. They made what might be called a “circumstantial case,” and any good attorney could have torn it apart; the equivalent of circumstantial evidence in medical literature is the logical fallacy of assuming that correlation equals causation. Almost every piece of published scientific and medical literature is really just reporting a correlation between two variables, but the authors, editors, journalists, and readers of a given article go an unwarranted step further and assume causation. One way to stop doing this is to make a habit of always trying to think of other explanations for the findings (or other hypotheses).
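As a small illustration of an “other explanation,” the sketch below simulates two variables that never influence each other but are both driven by a hidden third factor. Everything in it (the variable names, the noise levels, the sample size, the `pearson` helper) is a made-up assumption chosen only to show the effect.

```python
import random


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length lists."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / (var_x * var_y) ** 0.5


rng = random.Random(0)  # fixed seed so the run is repeatable
n = 5000

# Hidden common cause (hypothetical), e.g. overall health status.
hidden = [rng.gauss(0, 1) for _ in range(n)]

# Two measurements that each depend on the hidden factor plus their own
# independent noise. Neither one has any effect on the other.
exposure = [h + rng.gauss(0, 0.5) for h in hidden]
outcome = [h + rng.gauss(0, 0.5) for h in hidden]

raw = pearson(exposure, outcome)

# Remove the hidden factor's contribution and correlate what is left.
adjusted = pearson(
    [e - h for e, h in zip(exposure, hidden)],
    [o - h for o, h in zip(outcome, hidden)],
)

print(f"correlation before accounting for the hidden factor: {raw:.2f}")
print(f"correlation after accounting for it: {adjusted:.2f}")
```

In this toy setup the two measurements are strongly correlated even though neither causes the other, and the correlation all but disappears once the hidden factor is removed. A narrative that said “exposure causes outcome” would fit the raw data perfectly and still be wrong.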

Dressing things up as novel and scientific doesn’t have anything to do with whether or not they are true. The rhetorical brilliance of the History Channel documentary was how it appealed to its viewers. Every generation assumes that it is smarter than the last (it isn’t), and viewers are always ready to fall for some new understanding that others never had before, bolstered by new science or new techniques, without actually understanding the validity of the new science or techniques. The truth is, the hundreds of people who contemporaneously searched for Amelia Earhart and investigated her disappearance stood a far better chance of finding some actual meaningful evidence than a former Assistant Director of the FBI 80 years after the fact. But the filmmakers take advantage of our instinct to assume that everyone else who ever investigated the case was either dumber than us or involved in a conspiracy to hide the truth. They claimed both of these things regarding the Earhart disappearance, and these two claims are pretty standard among conmen today. But this happens in science and medical literature all the time as well. The reader of a medical journal is much more interested in and entertained by novel claims; few people enjoy reading studies that confirm what we already know. But Bayes’ theorem tells us that what we already know is, in a general sense, far more likely to be true than some new discovery. Studies that re-examine what we already know (replication studies) are almost never performed, and therefore the scientific process, which requires replication, is almost never fully executed. Instead, academic careers are made the same way this History Channel documentary was made: by claiming novelty to grab attention and then dazzling the audience with pseudoscience.

People find what they want to find and then stop looking. Why was Kota Yamano able to disprove the whole show in less than 30 minutes? Speaking Japanese helps, but, more importantly, he was skeptical and didn’t believe the central premise of the show, so he looked for evidence that it was wrong, even though he was disagreeing with the “expert consensus” presented in the program. Search satisficing is a bias at work every day in medicine: search for an article or a source that agrees with you and then stop looking. When you do that, you are likely to miss all the evidence that disagrees with you. People rarely let “the facts speak for themselves”; rather, they search for facts that agree with what they already believe (and look no more). As a rule, try to look for evidence that disagrees with what you already believe, which brings us to the last point.

Don’t seek to prove your theory; seek to disprove it by every means possible. Ultimately, this is the basis of the scientific method, and the failure to do it is the reason why so much science, including medical science, is dead wrong. This is how we avoid confirmation bias. Lazy investigators try to prove their theories; good investigators try to disprove their theories. The filmmakers didn’t once ask an expert to disprove that the photograph was of Earhart and Noonan or entertain other theories (like the possibility that it was taken two years before and published in a Japanese travel book available for anyone to find on the internet). In medical literature, this sort of laziness is almost universal. You should accept something as valid only after you have doggedly attempted, in every way you can imagine, to disprove it.