Here’s a conversation from a recent car ride:

Daughter: Penny has 5 children. The 1st is January, the 2nd is February, the 3rd is called March, and the 4th is April. What is the name of the 5th

Me (after considerable, don’t-want-to-be-shown-up-again thought): I don’t know. Tell me.

Daughter: What is the name of the 5th child.

Me: I don’t know! Tell me!

Daughter: WHAT is the name of the 5th child!

You’ve probably heard that one before. It’s amazing how hard this riddle is if you’ve never heard it, and how easy it is if you have. Of course, seeing it written down makes it very easy, since all the difference in the world rests with the use of either a period or a question mark at the end of the riddle. But let’s think about this riddle from a different perspective: the real difficulty is not with the question but with the biases and presumed limitations that the hearer of the riddle imposes.

- The hearer assumes that “What is the name of the 5th” is a question rather than a declaration even though the speaker doesn’t inflect the end of the sentence; the presence of the word ‘what’ is enough.

- This presumed limitation is augmented by the biased belief that no one would name her child “What.” If she had said, “May is the name of the fifth child” in the same inflection, then the problem wouldn’t have existed.

- Our minds look for patterns, so most people who hear it for the first time are looking for a pattern that fits. We are so good at looking for patterns that we often see them when they aren’t there (this is called apophenia, and science is riddled with it; the belief that vaccines cause autism is one example of this psychological trick).

Interestingly, someone who speaks English poorly (as a second language) is more likely to get this riddle correct than a native English speaker; the English-as-a-second-language speaker hasn’t had these heuristics drilled into his head and reinforced, so he is more “open-minded” in solving the problem. Usually in life, these shortcuts that our brains make (and the assumptions that we make in turn) are a good thing, and they keep us out of trouble. But they can also limit our problem-solving ability and trap us in wrong thinking.

Let me give you a more studied example. If you have seen this already, you won’t be impressed, but if you haven’t, read on. Below are nine dots arranged in three rows of three; your challenge is to draw four straight lines that go through all of the dots without taking your pencil/finger off the paper/screen (try to solve this before scrolling down).

This classic challenge dates back more than a century, to at least 1914, and was popularized in the latter half of the 20th century as a tool that companies and think tanks used to teach creative thinking skills. Psychologists have also studied it since at least 1930 to learn how people go about solving problems and how we can improve that process.

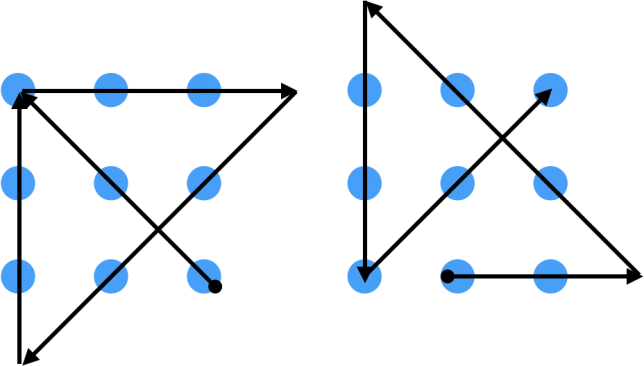

Did you solve the problem yet? If you didn’t, don’t worry. Most people can’t solve the problem even after racking their brains for several minutes. I’ll give you a hint (though, in fairness, it may not help you): this puzzle is likely the origin of the phrase “thinking outside the box.”

Did that help?

Here are some solutions:
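One such solution can be written down as coordinates and checked mechanically. The sketch below is a minimal Python illustration, assuming the dots sit at the integer points (0..2, 0..2); the names `DOTS`, `PATH`, `on_segment`, `covers_all_dots`, and `stays_inside_box` are hypothetical, chosen only for this example. The path is one valid four-line route, not necessarily the one pictured. Notice that two of its turning points fall outside the square.

```python
# The nine dots sit on a 3x3 grid with integer coordinates 0..2 on each axis.
DOTS = [(x, y) for x in range(3) for y in range(3)]

# One four-line route (illustrative): each consecutive pair of points is the
# start and end of one straight stroke, drawn without lifting the pencil.
# The turning points (0, -1) and (3, 2) lie outside the 3x3 "box".
PATH = [(0, 2), (0, -1), (3, 2), (0, 2), (2, 0)]


def on_segment(p, a, b):
    """Return True if point p lies on the closed segment from a to b."""
    (px, py), (ax, ay), (bx, by) = p, a, b
    # Collinear: the cross product of (b - a) and (p - a) is zero.
    cross = (bx - ax) * (py - ay) - (by - ay) * (px - ax)
    if cross != 0:
        return False
    # Between the endpoints: inside the segment's bounding box.
    return min(ax, bx) <= px <= max(ax, bx) and min(ay, by) <= py <= max(ay, by)


def covers_all_dots(path, dots):
    """Check that the connected polyline passes through every dot."""
    segments = list(zip(path, path[1:]))
    return all(any(on_segment(d, a, b) for a, b in segments) for d in dots)


def stays_inside_box(path):
    """True only if every turning point lies within the 0..2 square."""
    return all(0 <= x <= 2 and 0 <= y <= 2 for x, y in path)


if __name__ == "__main__":
    print(len(PATH) - 1)                # 4 straight lines
    print(covers_all_dots(PATH, DOTS))  # True
    print(stays_inside_box(PATH))       # False
```

The check makes the point of the puzzle explicit: the route covers all nine dots, and it does so precisely because its corners are allowed to leave the square.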

Easy, isn’t it? Why was it so hard to begin with?

- You assumed that your lines had to stay “inside the box” represented by the nine dots, even though that wasn’t a specific instruction.

- You limited yourself to searching for solutions that fell within this self-imposed boundary.

All problem solving and creative thinking is hindered by these same mental impasses. Thinking outside the box is supposed to mean challenging the presumptions and assumptions that limit us. If you already knew the solution to the nine-dot problem, you might not feel the sense of weirdness that many people feel when they first encounter it. But for you advanced folks out there, try this: How can you draw only one straight line through all nine dots without lifting your pencil off the paper? It’s possible. I’ll tell you how at the end if you haven’t solved it yet.

First, let’s think about problem solving in general for a minute.

Most problems have an almost infinite number of possible solutions. If I lose my car key (hey, it happens!) and ask my daughter to help me find it, it is really helpful if she narrows down where to look. Is it anywhere in the world? Or just in the living room? This is called the problem space. A good question defines the problem space for the problem solver. For example, “Can you help me find my car key in the living room?” is a better-defined question than “Can you help me find my car key?” Most questions don’t come to us as well-defined, clearly delineated problems; we make assumptions about the problem space in order to narrow our search for possible solutions. When we do this, we are thinking inside a box, and that usually works, until it doesn’t.

We usually narrow the problem space based upon past experience. Why would the key be anywhere in the world? Past experience tells my daughter it’s probably in the house, and probably in the living room. If you’re playing chess, past experience says an early queen sacrifice is usually a bad move, so lines of play that include it are usually excluded. When a patient presents with abdominal pain and it has never been acute intermittent porphyria in your experience, you don’t bother searching there. Each of these is a heuristic: a shortcut or rule of thumb that doesn’t guarantee a perfect result but usually leads quickly to a correct one. The problem, though, is that sometimes the key is at the grocery store, the early queen sacrifice does work, and the patient does have acute intermittent porphyria. The trick is balancing heuristic-driven shortcuts that narrow the search space against the need to arrive at the correct solution. In modern terms, this is the difference between System 1 thinking (fast and intuitive) and System 2 thinking (slow and deliberate). The difficulty is knowing when to switch between these two systems, because both are useful. If we considered every possible answer to every question, we would never solve any problem.

Once we have narrowed the search space, we have a hard time broadening it again. Our brains assume there is a reason we narrowed it. They assume either that we’ve already been down those roads and found dead ends, or that what we already know is correct and therefore any solution that conflicts with our “correct” prior beliefs must be faulty.

When we do find solutions outside of our search space, we often call this a paradigm shift.

Here are some paradigm shifts:

- The germ theory. Before the germ theory of disease, it never occurred to us that washing hands between patients might be important, for example. Patients were dying in front of us by our own actions, but the idea that we were causing it was ignored because it was incompatible with our existing beliefs. People like Ignaz Semmelweis were thought to be crazy when they challenged that prior belief. Once the shift was made, however, a whole new set of innovations followed: antiseptic and aseptic principles, rubber gloves, pasteurization, water sanitization, sewage processing, refrigeration, vaccinations, antibiotics, and myriad other innovations that seemed obvious once we took the blinders off.

- The viral origin of cervical cancer. The idea that a virus might cause a cancer used to seem absurd, but with this shift we are now well on our way to preventing several cancers and have developed novel strategies for screening and treatment as well.

- Bacterial cause of stomach ulcers. This dramatic shift has happened within our lifetimes; we used to blame stress and a host of other factors for ulcers. Now we understand that the bacterium Helicobacter pylori causes most of them, and we treat and think about them very differently.

We limit ourselves more than any person or set of circumstances limits us. Though I’ve been talking about thinking, this is even true of doing. People used to think that no one could run a mile in under four minutes, so no one did. Then a resident physician named Roger Bannister, a part-time runner with a light training schedule, did it on May 6, 1954 (after he completed morning rounds at the hospital). Just like that, Bannister changed the paradigm. He expanded the search space. He showed that something people previously thought impossible was possible. Over 1,400 men have run the mile in under four minutes since then.

The mind is key, even to physical accomplishments. Not too long ago, most people would have thought that human flight, space travel, moon visits, or iPhones were impossible. But human innovation is limitless if not artificially constricted by our presumptions.

If you think you can’t do something, you can’t.

Paradigm shifts happen when we think differently, and real innovation happens when we free our minds of past failures.

How can we think outside the parameters of our self-imposed search space? This is the tough bit. A lot of research has involved giving people “hints” to expand their thinking, but it’s hard to give a hint when we don’t know the right answer. We all know the answer to the nine-dot problem now, but what if we didn’t? How could we give a hint or get one? Worse, many times the only clue that we need to think differently is realizing that we can’t come up with the answer; we call that an impasse. But most of the time, at least in medicine, the problem isn’t that we have reached an impasse; it’s that we have reached the wrong answer and don’t know it! Our biases often lead us rapidly to the incorrect diagnosis, and no teacher is there to tell us that it is incorrect. How can we prevent this?

The traditional process is viewed like this (source):

As you can see, it takes repeated failure for us to seek representational change, meaning that we try to reframe the problem or challenge our assumptions. In medicine, we would rather skip the whole ‘repeated failure’ step if possible. In fairness, though, we have many examples of repeated failure before us and we still don’t seek representational change. What do I mean? Well, for example:

- We believe that around 80% of physician-patient encounters involve substandard care (not delivering the right diagnosis, right treatment, and/or not following evidence-based guidelines). This type of failure requires a fundamental change in the way we teach medicine, yet it’s not happening.

- We believe that 80% of published medical literature each year reaches conclusions that are false. This should invoke a representational change as well, but momentum and inertia are powerful forces (hint: Bayes’ theorem is a possible solution, but this requires a huge paradigm shift for most people).

- We have tried many different tocolytic drugs to stop preterm labor over the years and none have worked (representational change: labor isn’t correlated with the amount of uterine contractions – yes, that’s actually true).

- We have focused on cholesterol as an important cause of heart disease, but the lipid hypothesis has resulted in numerous costly dead ends (statins, for example).
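To make the Bayes’ theorem hint concrete, here is a minimal sketch of one standard way to apply it to published findings, assuming a “positive” result is one that crossed a significance threshold. The chance that such a finding is actually true (its positive predictive value) is PPV = (power × prior) / (power × prior + α × (1 − prior)). The function name and every number below are hypothetical illustrations, not figures from any study, and this is not necessarily the approach the author has in mind.

```python
def positive_predictive_value(prior, power, alpha):
    """Probability that a 'statistically significant' finding is true.

    prior: fraction of tested hypotheses that are actually true (assumed)
    power: probability a study detects a true effect (1 - beta)
    alpha: probability a study flags a null effect as significant
    """
    true_positives = power * prior
    false_positives = alpha * (1 - prior)
    return true_positives / (true_positives + false_positives)


if __name__ == "__main__":
    # Hypothetical inputs: conventional power 0.8 and alpha 0.05,
    # with progressively less plausible hypotheses being tested.
    for prior in (0.5, 0.1, 0.01):
        ppv = positive_predictive_value(prior, power=0.8, alpha=0.05)
        print(f"prior={prior:.2f}  PPV={ppv:.2f}")
```

With these made-up inputs, the printed PPV falls from about 0.94 (prior 0.5) to 0.64 (prior 0.1) to 0.14 (prior 0.01): the less likely the hypotheses being tested, the larger the share of “significant” results that are false, even when every study is run correctly.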

I could go on. Far too often, repeated failure does not stimulate us to step back and ask basic, challenging questions. The further we travel down a path, the harder it is to turn away from it (the sunk cost fallacy).

But what’s the answer to my question? How can we prevent diagnostic error in medicine in the first place? I don’t have all the answers, but it must start with changing how we take a patient’s history. Traditionally, students are taught to take a history and perform a physical exam first, then make a differential diagnosis. But the history and physical itself very often narrows the search space too much. Instead, make the differential diagnosis first, right after hearing the chief complaint. Not only will this give a more appropriate search space, but it will also improve the quality of the history and physical, since they will serve as tools to work through the differential diagnosis. Read more about that here.

Be wary of people who use data to prove something they already believe. To avoid biases (like confirmation bias) that trick you into thinking you’ve found the solution when you haven’t, decide what evidence would justify your belief before you go looking for the answer. What do I mean? Let’s say you want to decide who the greatest NFL quarterback of all time is. The best way to do that is to decide which criteria must be satisfied before you start looking at the stats. Is it highest career passer rating? Aaron Rodgers. Most Super Bowl victories? Tom Brady. Most Super Bowl wins without a loss? Terry Bradshaw and Joe Montana. Most career passing yards? Peyton Manning. And so on. You can answer this question almost any way you like, depending on how you define the outcome criteria.

You really must decide how you are going to define success before looking at the data, and this is a fundamental problem with science. Most scientists have already defined what they want to “prove” with the data; then they carve up the data or design the experiment to do exactly that. Believe vaccines cause autism? There are studies for you. Believe they don’t? There are studies for you, too. Think Sid Luckman is the greatest quarterback of all time? You can “prove” it if you define the outcome in the right way (most seasons leading the league in average passing yards). Define success before looking at the data.

Your preexisting beliefs bias how you view problems. Do you think that man-made global warming is the greatest crisis facing the world today? Are you in an echo chamber that constantly repeats “reduce CO2 emissions” as the solution? The real solution may be outside that box. More than likely, it will come from innovations that harvest the CO2 in our atmosphere to make the energy of the future. We should be thankful for those scientists who saw atmospheric CO2 not as the problem but as the solution to another problem! That’s an example of a paradigm shift and thinking outside the box. That’s where and how innovation happens.

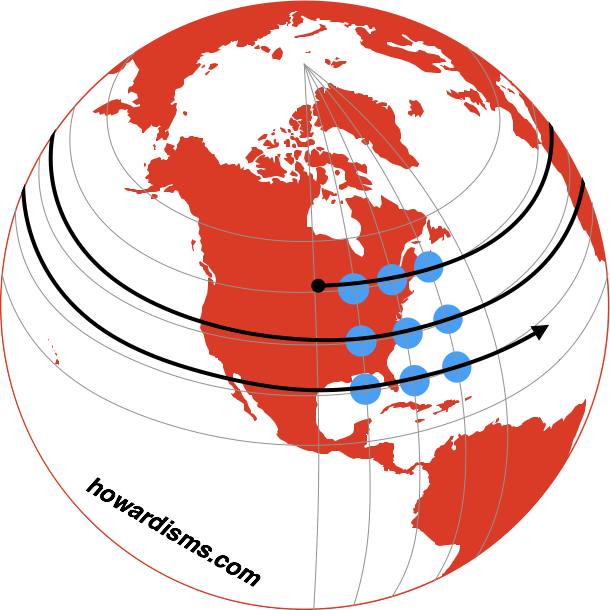

Here’s the answer to how to draw a single straight line through nine dots:

By the way, my 13-year-old son offered this solution in under a minute, having never seen the problem before. It didn’t even seem to take effort. He also suggested using a very large pencil whose stroke would cover all nine dots at once, which was actually a fair answer given the parameters set by the question.

Does it seem your kids are sometimes smarter than you? Better with computers, for example, or non-Euclidean geometry? They aren’t smarter than you; they just haven’t had the past experiences that close your mind and anchor you to previous failures. Children are great problem solvers, not just because they haven’t been hemmed in by dogma but because they aren’t afraid of failure.

Free your mind. Stop being a slave to dogmas and false beliefs of the past. Get back to basic principles and question all of your assumptions and presumptions.

This doesn’t mean accepting willy-nilly every new, crazy idea that comes along. Don’t be a victim, either. It means demanding a high degree of evidence for your beliefs and questioning everything. It means routinely arguing against yourself and your beliefs. It means thinking outside the box.