The ability to read scientific literature critically is one of the foundational skills of physicians. The most common way (and perhaps the most wrong way) that physicians read literature is to read the abstract of the paper (and sometimes just the conclusion of the abstract) and get a “take-away” message, assuming that all of the other work and vetting has been done for them. Keep in mind, however, that for a variety of reasons, most published conclusions in the literature are untrue. Peer review is an utter sham, in need of significant correction. So, recognizing these two points, let’s look at one approach to analyzing a scientific paper.

Scientific papers are generally organized in the IMRD format: Introduction, Methods, Results, and Discussion.

Introduction

- What is the quality of the journal in which the article is published?

- Not all journals are created equal. Consider the quality of the journal that the article appears in and the quality of that journal’s peer review and editorial oversight.

- Do the authors have any conflicts of interest or other biases?

- Do the authors have commercial bias, like working for a drug company or lab company, or as a plaintiff’s expert witness, which would incentivize them to be biased in their research? Bias also comes in other forms, like a need for publications, fame, or promotion of a pet theory.

- What question is being asked by the researchers?

- Rephrase the central point of research succinctly and clearly in your mind.

- What is the null hypothesis?

- This should be clearly defined and stated. All of the research design is built around a null hypothesis. Remember, we are seeking to disprove, not prove.

- What outcomes are of interest? What is the primary outcome being observed? What are the secondary outcomes?

- The primary outcomes are usually the only ones the study is adequately powered to assess; make sure you understand which outcomes are primary and which are secondary, and which were studied a priori and which were not. The necessary statistics change and the study design may need to be different for these different outcomes.

- What is already known about this topic?

- It is hard to know what confounders (and other important facts that may affect the design of the study) exist without first reviewing what is already known. A good paper will do this. A bad paper will skip over relevant, already published data. This knowledge is also necessary to assess the pre-study bias and odds.

- Do the stated alternative hypotheses make sense based on what we already know? What is the pre-study probability that the hypotheses are true?

- As stated above, even if the results in a paper turn out to be statistically significant, the conclusion still has a good chance of being false if the pre-study likelihood of the hypothesis was low. Again, see here.

- Restate and summarize, in your own words, what is known about the topic and what the investigators are seeking to learn.

- Stating this in your mind will help you not be distracted by the clever writing.
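
The point about pre-study probability can be made concrete with a little arithmetic. The sketch below is only an illustration: it assumes a conventional significance threshold (alpha = 0.05) and 80% power, ignores bias entirely, and uses a made-up function name, `positive_predictive_value`. It applies Bayes' rule to ask how often a statistically significant result reflects a hypothesis that is actually true.

```python
def positive_predictive_value(prior_probability, alpha=0.05, power=0.80):
    """Chance that a statistically significant finding is true.

    prior_probability: pre-study probability that the hypothesis is true.
    alpha: false-positive rate (assumed 0.05 here).
    power: chance of detecting a true effect (assumed 0.80 here).
    """
    true_positives = power * prior_probability
    false_positives = alpha * (1.0 - prior_probability)
    return true_positives / (true_positives + false_positives)


# Hypothetical pre-study probabilities: a coin flip, a plausible idea, a long shot.
for prior in (0.50, 0.10, 0.01):
    ppv = positive_predictive_value(prior)
    print(f"pre-study probability {prior:.2f} -> chance the finding is true {ppv:.2f}")
```

Under these assumptions, a hypothesis with a 50% pre-study probability gives roughly a 94% chance that a significant finding is true, while a 1% long shot gives only about 14%, even though both reached p < 0.05.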

Methods

- What would be the best way to design a study to answer the research question?

- If you were designing a study to answer the question, what would be the best way (e.g., a placebo-controlled, double-blinded, randomized controlled trial)? Are there reasons why the best type of design cannot be done (ethical, financial, time, etc.)?

- How did the authors design the study?

- If they did not do the best type of study, there may be good reasons; but is the method used able to answer the questions asked, and with what degree of certainty?

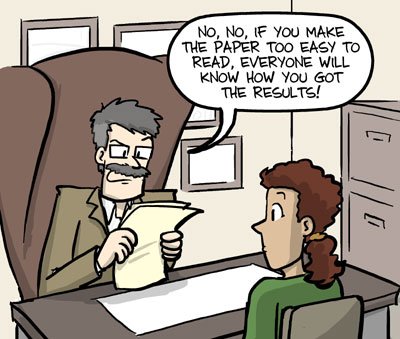

- howardism: When people design studies, they often design them to show the effect they are expecting to find rather than to disprove it (this is the opposite of what should happen).

- What are the weaknesses of the study design?

- Poorly described or too narrow a patient population, poorly matched controls, unclear study endpoints, confounding issues, differences in measurement, recall bias, loss of patients to follow-up, etc. Read more here.

- What were the inclusion and exclusion criteria? Did these criteria make sense? How might the study have been affected by different choices?

- Choices about inclusion and exclusion criteria can have enormous impact on the outcome of the study. Review each one and decide if it made sense to include or exclude the group. Are there others the authors didn’t consider?

- What variables were held constant? Was there a control? Was the control appropriate?

- As with exclusion and inclusion criteria, control of variables is a huge factor. Do the variables controlled for make sense? Are there variables the authors didn’t consider?

- Does the study method address the most important sources of bias for the question?

- Biases come in many, many forms. The ideal study is free of bias (though no studies truly are). What did the authors do to negate bias? What biases did they not consider?

- Was a power analysis done? If not, why not?

- A power analysis is fundamental to assessing a trial for Type I and Type II errors. Claims of statistical significance have no meaning without a proper power analysis. If a power analysis was not done, why not? A study without a power analysis is a preliminary study. Remember, you can enroll too many patients in a study just as easily as you can enroll too few. If enrollment didn’t match the power analysis, was an a posteriori analysis done? If not, why not?

- Did the study follow the study design? Was there over-enrollment, under-enrollment, or other deviations from the protocol?

- The authors should account for deviations from the protocol; if they did not, think about how the outcomes might have been affected.

- Was the study IRB approved? Did the IRB controls adversely affect the study design?

- Sometimes a study may be restricted, ended, or otherwise affected by the IRB in a way that significantly alters the protocol and outcomes of the study.

- Were the statistical methods used appropriate?

- Big subject. Keep learning.
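
To get a feel for what a power analysis involves, here is a minimal sketch of a sample-size calculation for comparing two event rates. It uses the common normal-approximation formula for two proportions with a two-sided test; the function name `sample_size_per_group` and the example event rates are assumptions for illustration, and a real trial would rely on dedicated software and a statistician.

```python
from math import ceil
from statistics import NormalDist


def sample_size_per_group(p_control, p_treatment, alpha=0.05, power=0.80):
    """Approximate patients needed per arm to detect p_control vs p_treatment."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided Type I error
    z_beta = NormalDist().inv_cdf(power)           # power = 1 - Type II error
    variance = p_control * (1 - p_control) + p_treatment * (1 - p_treatment)
    effect = p_control - p_treatment
    return ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


# Hypothetical example: the event rate falls from 20% to 10%, or only to 15%.
print(sample_size_per_group(0.20, 0.10))
print(sample_size_per_group(0.20, 0.15))
```

The smaller the expected difference, the more patients are needed, which is why the planned effect size in a paper's power analysis deserves as much scrutiny as the enrollment numbers.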

Results

- How closely matched were the patient populations in the different groups of the study?

- Does this affect the conclusions offered?

- Were all of the relevant confounders accounted for?

- Which important ones were left out and how does that affect the conclusions?

- What did the study find?

- What did it actually find? State it in your words. Usually, it was that the null hypothesis was rejected. That doesn’t necessarily mean that the alternative hypothesis is true.

- Were the findings statistically significant?

- This assumes a lot, not just a p-value <0.05. Was there a power analysis? Did it fit the results? Were appropriate methods used? Were different methodologies used for multiple comparisons analysis? Etc.

- How certain is the measured effect?

- How wide is the confidence interval or other measure of the scatter of the data?

- Were conclusions about subset or secondary outcomes statistically relevant? Were appropriate statistical methods used for them? Was the study powered sufficiently to address all of the secondary outcomes?

- Remember that different statistical methods need to be used for subset analyses and multiple comparisons. Was this done? Why not? Most papers get this part entirely wrong.

- If the data suggests a correlation, is there any evidence of causation?

- Bradford Hill’s criteria are commonly used to look for evidence of causation:

- Strength (bigger=more likely to be causal)

- Consistency (between different persons doing studies in different places=more strength)

- Specificity (more specific=more likely a causal relationship)

- Temporality (cause→effect)

- Biological gradient (look at how exposure changes incidence)

- Plausibility (is a mechanism proposed for how/why they think they got their results?)

- Coherence between epidemiology and laboratory findings

- Experiment

- Analogy

- If an epidemiological study, were any of the seven deadly sins committed?

- Failing to provide the context and definitions of study populations.

- Insufficient attention to evaluation of error.

- Not demonstrating comparisons are like-for-like.

- Either overstatement or understatement of the case for causality.

- Not providing both absolute and relative summary measures.

- In intervention studies, not demonstrating general health benefits.

- Failure to utilize study data to benefit populations.
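
The warning about subset analyses and multiple comparisons is easier to appreciate with numbers. The sketch below shows how quickly the chance of at least one false positive grows with the number of independent tests, and then applies the Holm-Bonferroni step-down correction, one of several accepted adjustments. The function names and p-values are invented for illustration and do not come from any real study.

```python
def family_wise_error_rate(n_tests, alpha=0.05):
    """Chance of at least one false positive across n independent tests."""
    return 1 - (1 - alpha) ** n_tests


def holm_bonferroni(p_values, alpha=0.05):
    """Return True for each p-value that survives the Holm-Bonferroni correction."""
    order = sorted(range(len(p_values)), key=lambda i: p_values[i])
    rejected = [False] * len(p_values)
    for rank, index in enumerate(order):
        threshold = alpha / (len(p_values) - rank)
        if p_values[index] > threshold:
            break  # every larger p-value fails as well
        rejected[index] = True
    return rejected


print(f"{family_wise_error_rate(20):.2f}")  # about 0.64 with 20 tests

# Hypothetical p-values for five secondary outcomes.
secondary_p_values = [0.04, 0.003, 0.03, 0.20, 0.01]
print(holm_bonferroni(secondary_p_values))
```

In this made-up example, four of the five outcomes have p < 0.05, but only two (0.003 and 0.01) survive the correction.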

Discussion

- Were all of the appropriate outcomes addressed?

- Studies tend to focus on one type of outcome, but often unintended harms from the intervention (for example, antimicrobial resistance) are not explicitly studied. Not vaccinating children may reduce the risk of death or injury from the vaccine, but it also increases the overall risk of death (from the disease); you have to look at all relevant outcomes.

- Was a surrogate outcome used?

- Just because a drug lowers cholesterol doesn’t mean it reduces heart disease risks. Or, just because low vitamin D levels are found in patients with fatigue doesn’t mean that giving a patient vitamin D will improve her fatigue.

- What are other alternative hypotheses that fit with the data?

- The fact that the null hypothesis is not likely doesn’t mean that the alternative hypothesis is; and there is almost always more than one alternative that can explain the data.

- Are all of the authors’ conclusions supported by their data?

- Authors like to draw inferences and conclusions that have nothing to do with disproving the null hypothesis; be careful what the take-away message is.

- Are the conclusions novel and how do they add to what we know/do?

- The more novel the conclusion (e.g., disagrees with previous ten trials), the more skeptical one should be.

- Is the outcome studied clinically relevant? What is the magnitude of the effect, the number needed to treat, etc.?

- Many things are statistically significant but not clinically significant, either because the problem addressed is so unusual or insubstantial that intervention is inconsequential, or because the effect is so small (that is, the NNT is so large) that the intervention may not be worthwhile.

- Is the result of the study broadly applicable, or applicable to your patient population?

- Make sure the patient population is similar to the patient population you would like to apply the data to.

- What do others think about the study?

- An internet or PubMed search about a particular paper will often reveal important commentary, letters to the editor, and criticism that you might not have considered.
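
The difference between statistical and clinical significance, and between relative and absolute measures, can be sketched with the usual definitions: absolute risk reduction (ARR) is the control event rate minus the treated event rate, relative risk reduction (RRR) is ARR divided by the control event rate, and NNT is 1 divided by ARR. The function name `effect_measures` and the event rates below are hypothetical, and the sketch only handles the case where the treatment reduces risk.

```python
def effect_measures(control_event_rate, treated_event_rate):
    """Absolute and relative effect sizes for a treatment that lowers risk."""
    arr = control_event_rate - treated_event_rate
    if arr <= 0:
        raise ValueError("no absolute risk reduction, so NNT is undefined")
    return {
        "absolute_risk_reduction": arr,
        "relative_risk_reduction": arr / control_event_rate,
        "number_needed_to_treat": 1 / arr,
    }


# Hypothetical event rates: the same 50% relative reduction, common vs rare outcome.
for label, control, treated in (("common", 0.20, 0.10), ("rare", 0.002, 0.001)):
    m = effect_measures(control, treated)
    print(
        f"{label}: RRR {m['relative_risk_reduction']:.0%}, "
        f"ARR {m['absolute_risk_reduction']:.3f}, "
        f"NNT {m['number_needed_to_treat']:.0f}"
    )
```

Both scenarios can be advertised as "cuts the risk in half," yet one requires treating about 10 patients to prevent one event and the other about 1,000.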

Each of these bullet points raises many more questions. Stay tuned.