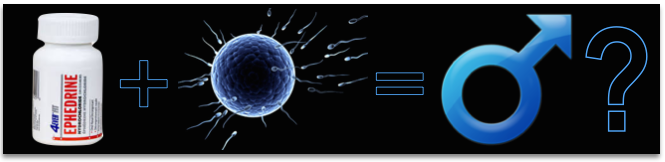

It’s ironic that a journal that’s supposed to combat high blood pressure is doing its best to raise mine with this click bait. A study published this month in the American Journal of Hypertension entitled Maternal Blood Pressure Before Pregnancy and Sex of the Baby: A Prospective Preconception Cohort Study concluded that women with higher preconceptual blood pressures were more likely to have a male child. Give me a break. Before you run off and buy some cold medicine to raise your BP to increase your chances of having a boy baby (I guarantee it will happen), we need to have a little talk.

This is the type of nonsense that fills many medical journals and gets attention from the media. It works its way into textbooks and its novelty gets talked about by the lay press. It leads people to draw silly conclusions in their attempts to understand the information and rationalize it in the world they live in. It is garbage and it shouldn’t be published, at least without comment. But editors and peer reviewers uncritically welcome such nonsense to make money and to get clicks and media coverage which will increase the journal’s impact factor. So far this article is by far the most popular in this month’s issue of the journal and has been picked up by over 40 news outlets. Yet at the time of writing, the free PDF of the article had been downloaded only 16 times; two of those were by me and no doubt most of the rest were by the authors. It appears that it was published through the journal’s open access option, which apparently cost the authors $3,650. This isn’t science, it’s business.

So what’s wrong with their ridiculous paper? Let’s go through the five-step process I previously outlined in How Do I Know if a Study is Valid? and highlight the issues. Recall the steps:

- How good is the study?

- What is the probability that the discovered association is true?

- Rejecting the null hypothesis does not prove the alternate hypothesis (or, What are other hypotheses that explain the data?)

- Is the magnitude of the discovered effect clinically significant?

- What cost is there for adopting the intervention into routine practice?

How good is the study?

This Canadian study was done on women in China (where there is a great interest in selecting male fetuses). Out of 3,375 women, they analyzed data regarding 1,411 women who had preconceptual data collected and went on to deliver a singleton pregnancy. This cohort of women went on to deliver 672 females and 739 males, which skews the data towards an unexpected number of male infants. The investigators measured several independent factors preconceptually that were analyzed to see if they differed between the group having boys and the group having girls: age, years of education, smoking, passive smoking exposure, preexisting hypertension, preexisting diabetes, BMI, waist circumference, systolic blood pressure, diastolic blood pressure, mean arterial blood pressure, total cholesterol, LDL, HDL, triglycerides, and glucose. The blood pressure readings were further divided into quintiles, each of which was analyzed.

After adjusting for factors that the authors thought might otherwise confound hypertension, they found that the systolic blood pressure was an average of 2.7 mm Hg higher in the group of women who had male babies and they claimed that this was statistically significant with a P-value of 0.0016. No other comparisons were statistically significant. The rest of the article draws misleading and unsupported conclusions from this data and provides unwarranted speculation that I won’t repeat here. They are even so bold as to make the clickbait claim that a woman with a systolic blood pressure greater than 123 is 1.5 times as likely to have a male as compared to a woman with a lower pressure. Wow. If this seems to smack common sense in the face, there’s a reason.

So what gives? Is this a good study? No. I grow increasingly frustrated by this type of study and there are many problems, but the main problem that the whole house of cards is built upon is the claim that the observed difference in systolic blood pressures (a difference of 2.7 mm Hg) is meaningful just because there is a P-value of less than 0.05. So why don’t I care about this (the P-value after all was 0.0016)?

First, I should tell you that a difference of even 1.3 mm Hg given this number of patients in the trial would have produced a P-value of less than 0.05 (0.0414 to be precise). Does that seem absurd? It is. The expected deviation of automated blood pressure cuffs is about ± 6 mm Hg, so the study was powered to find a difference in blood pressure smaller than the standard deviation of the machine used to measure the blood pressures.

The problem is that the study has no power analysis. P-values can only be interpreted in the context of a power analysis. If a study is over-powered, then even a tiny difference will appear statistically significant, even if it is not actually significant. Let’s say the authors wanted the study to be powered to find a difference of 5 mm Hg between the two groups, if such a difference existed; in this case an N of 90 (rather than 1,411) would have been appropriate. The easiest way to fake statistical significance when there isn’t any is to over-enroll; the higher the N, the lower the P-value. This is what is called P Hacking. Given sufficient N, even a difference of 0.1 mm Hg would be considered significant. These decisions must be made a priori or adjusted for a posteriori. They did neither.
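To make the "higher N, lower P-value" point concrete, here is a minimal sketch. It is not the paper's analysis: it uses a simple normal approximation for two equal-sized groups, and the standard deviation of 12 mm Hg is a number I am assuming purely for illustration, since the study's raw data isn't available. The function name is my own.

```python
import math

def two_sided_p_value(diff_mm_hg, sd_mm_hg, n_per_group):
    """Two-sided P-value for a difference in means between two
    equal-sized groups, using a normal (z-test) approximation."""
    standard_error = sd_mm_hg * math.sqrt(2.0 / n_per_group)
    z = abs(diff_mm_hg) / standard_error
    # erfc(z / sqrt(2)) equals 2 * (1 - Phi(z)), the two-sided tail area
    return math.erfc(z / math.sqrt(2.0))

ASSUMED_SD = 12.0   # mm Hg; hypothetical value, NOT taken from the paper
TINY_DIFF = 0.1     # mm Hg; the trivially small difference from the text

for n in (50, 500, 5_000, 50_000, 500_000):
    p = two_sided_p_value(TINY_DIFF, ASSUMED_SD, n)
    print(f"n per group = {n:>7,}: P = {p:.4g}")
```

Under these assumptions the same clinically meaningless 0.1 mm Hg difference slides from nowhere near significance to comfortably below 0.05 as the groups grow, with nothing changing except the enrollment.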

Second, the adjustments used for the adjusted odds ratios were selective and artificially limited. They adjusted for age, for example, but they chose not to adjust for exposure to secondhand smoke (which could reasonably affect blood pressure), nor did they adjust for average time from enrollment to pregnancy in the study (which was the biggest difference between the two groups, at nearly five weeks; this difference could easily have reflected a difference in staff or equipment or calibration). Since we don’t have the data, we cannot perform the adjustments ourselves, but it is very reasonable to assume that a complete adjustment may easily have resulted in a loss of the purported statistical significance.

Third, they have a serious multiple comparisons problem. This study tested multiple hypotheses, and at least 22 hypotheses were put forward as preconceptual factors that might have influenced gender determination, ranging from maternal age to triglyceride levels (including the quintiling of blood pressures). We have to make an adjustment to the nominal P-value to adjust for over-enrollment, and we can be very conservative and use a new nominal value of 0.025 as a threshold for significance. If we apply the Bonferroni correction to this, accounting for 22 comparisons, then an adjusted P-value of 0.0011363 becomes the new threshold for significance. Using Sidak’s adjustment, we get a P-value of 0.0011501 as the threshold for significance. By either adjustment, we learn that the claimed P-value of 0.0016 is not statistically significant, and that’s assuming that their P-value is adequately adjusted (it almost certainly is not).
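If you want to check the two thresholds yourself, the arithmetic is short. This sketch takes the conservative nominal value of 0.025 and the count of 22 comparisons from the paragraph above; the function names are mine.

```python
def bonferroni_threshold(alpha, n_tests):
    """Per-comparison threshold under the Bonferroni correction."""
    return alpha / n_tests

def sidak_threshold(alpha, n_tests):
    """Per-comparison threshold under the Sidak correction."""
    return 1.0 - (1.0 - alpha) ** (1.0 / n_tests)

NOMINAL_ALPHA = 0.025   # conservative nominal value used in the text
N_COMPARISONS = 22      # hypotheses tested, as counted in the text
REPORTED_P = 0.0016     # P-value claimed by the paper

bonf = bonferroni_threshold(NOMINAL_ALPHA, N_COMPARISONS)
sidak = sidak_threshold(NOMINAL_ALPHA, N_COMPARISONS)

print(f"Bonferroni threshold: {bonf:.7f}")
print(f"Sidak threshold:      {sidak:.7f}")
print("Significant under Bonferroni?", REPORTED_P < bonf)
print("Significant under Sidak?     ", REPORTED_P < sidak)
```

Sidak is always slightly more lenient than Bonferroni, but here the gap is tiny and the reported P-value clears neither bar.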

More importantly, if no adjustments at all are made, and the paper is taken as is, then it should be remembered that the chance of finding one or more statistically significant differences when 22 hypotheses are tested is 67.65%. So it was more likely than not that at least one of the 22 probed variables would turn up with a false positive. But this is why the multiple comparisons adjustments must be made; when they are, the claim of significance goes away.
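That 67.65% figure is the familywise error rate, and it falls out of one line of arithmetic. The sketch below assumes the 22 tests are independent and each uses the usual 0.05 cutoff; in reality the variables are correlated (systolic, diastolic, and mean arterial pressure certainly are), so treat it as a rough figure rather than an exact one.

```python
def familywise_error_rate(alpha, n_tests):
    """Chance of at least one false positive across n_tests
    independent tests, each run at significance level alpha."""
    return 1.0 - (1.0 - alpha) ** n_tests

fwer = familywise_error_rate(0.05, 22)
print(f"Chance of at least one false positive: {fwer:.2%}")
```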

The study has numerous other embarrassing problems, but suffice it to say that an unadjusted blood pressure difference in the two groups of 2.7 mm Hg is not significant.

What is the probability that the discovered association is true?

Usually, I stop with the first question if no significant data is found, but for the sake of playing along, let’s answer this question as well. Recall that this question, which involves Bayesian updating, is based upon first determining what the pretest probability of the hypothesis being tested is. In this case, given all that we know with a high degree of certainty about how sex is determined, what would be a fair estimate that a difference of 5 mm Hg (let alone the observed 2.7 mm Hg) in blood pressure measurements many weeks before conception would affect the eventual sex of the fetus? I think you’re picking up what I’m putting down, so I won’t wax eloquent about this and will simply state that this hypothesis undoubtedly, prior to the publication of this study, had less than a 1% chance of being true (probably far, far, far less, but I am generous to a fault). Given this low pre-study probability, even if a high quality, unbiased trial were to find a statistically significant result, it would only change the probability of the hypothesis being true to about 14%; if we introduce even a smidgen of bias to that good trial then the probability drops to only 5%. But, alas, this trial is not good and did not produce a significant result.
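For anyone who wants to see where numbers like 14% and 5% come from, here is a sketch of the post-study probability calculation. The inputs are my assumptions for illustration, since they aren't spelled out above: 80% power, a 0.05 significance threshold, and a bias term of 0.10 (the fraction of would-be negative results that get reported as positive anyway, in the style of Ioannidis's positive predictive value formula). With a 1% pre-study probability, those inputs land close to the figures quoted.

```python
def post_study_probability(prior, power=0.80, alpha=0.05, bias=0.0):
    """Probability that a hypothesis is true given a 'significant' result.

    prior : pre-study probability that the hypothesis is true
    power : chance of detecting a true effect (assumed 0.80)
    alpha : false positive rate of the test (assumed 0.05)
    bias  : fraction of otherwise-negative results reported as positive
    """
    # True hypotheses that end up reported as positive
    true_positives = prior * (power + bias * (1.0 - power))
    # False hypotheses that end up reported as positive
    false_positives = (1.0 - prior) * (alpha + bias * (1.0 - alpha))
    return true_positives / (true_positives + false_positives)

PRIOR = 0.01  # the generous 1% pre-study probability from the text

unbiased = post_study_probability(PRIOR)
biased = post_study_probability(PRIOR, bias=0.10)  # bias level is assumed

print(f"Good, unbiased trial: {unbiased:.1%}")
print(f"With a little bias:   {biased:.1%}")
```

Drop the prior to something more realistic than 1% and both figures shrink accordingly, which is the whole point: a single significant result cannot rescue a hypothesis that was wildly implausible to begin with.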

What are other hypotheses that explain the data?

Of course, even if the data is real, we must consider what other hypotheses explain the data. Consider this picture:

What’s going on here? Let’s generate some hypotheses: a chameleon climbed up on a towel and changed its colors to blend in; a chameleon climbed up on a towel and the towel changed its colors to match the chameleon; someone who owns a cute chameleon bought a similarly colored towel and placed the animal on it to take a picture that would hopefully go viral; or, perhaps, someone is really good with Photoshop. I’m sure you can think of more. Note that the observed data is compatible with all four theories (and some more I didn’t list).

Just because we might accept that the data is real and valid doesn’t mean that any one particular alternate hypothesis is true. Most people who saw this viral picture assumed that the chameleon had changed its colors to match the towel. But those are the normal colors of the blue bar ambilobe panther chameleon; the owners simply posed the animal with a similar towel. But the point is that the data alone cannot tell you that; you have to generate hypotheses and decide which one is most probable based upon all available data, including data not in the study.

Why else might the authors of this paper have found the observed difference (assuming it is a real difference) besides random chance?

- Different blood pressure machines may have been used (especially since the two arms were enrolled about 5 weeks apart on average).

- Different operators (maybe the person who worked when the male fetus group was more likely to be enrolled placed the cuff differently or liked to chat with the patients more).

- Perhaps someone committed fraud in collecting the data.

- Perhaps someone committed fraud in analyzing the data.

- Perhaps that 5 weeks temporal difference in the two groups reflected a different atmospheric pressure, temperature, environmental toxins or chemicals, or other unknown factors which either independently affected the patients’ blood pressures, the fetuses’ gender differentiation, or both.

We could go on for a while, but you get the point. Knowing nothing else, and raising no question as to the quality of the paper’s data or its methods, we know at the outset that there is a 67.65% chance that the observed difference was due to chance alone, making that the most likely hypothesis. Again, this assumes that there even was a valid finding, and there was not.

Is the magnitude of the discovered effect clinically significant?

No, for three reasons. First, there was no discovered effect. Second, 2.7 mm Hg is well within the margin of error of the measurement device. Third, unless a prospective, randomized, triple-blinded, placebo-controlled trial produces a result that says that raising a woman’s blood pressure by about 3 points causes her to be more likely to have a male fetus, then I have no clinical use for this data. Even if such a trial occurred and a result corroborated the magnitude of effect suggested by this paper, I would have to raise the blood pressure of 1,000 women to produce 17 more males, and I doubt that this is something that anyone wants to do (maybe that increased blood pressure will lead to more miscarriages and the net effect is actually fewer males – oh, the unintended consequences).

I’m not going to deal with the fifth question relating to the cost of adoption of the intervention, because it is difficult to determine cost from fantasy.

I will say that such “science” is dangerous and irresponsible. Why didn’t the editors or peer reviewers point out the multiple problems I have mentioned (and many, many more)? Why was this paper promoted by the journal with press releases to major media outlets? Where is the editorialization that urges caution in drawing any meaningful conclusions from such preliminary work? All of these issues and more are the types of things that I discuss in An Air of Legitimacy, A Hindrance to Progress.

Please don’t take an ephedrine because you want a boy. Oh, and because I wrote this, the impact factor of this journal will actually go up because I linked to them. Geez.