A lot of folks on both ends of the political spectrum were shocked at the results of the recent national election. Virtually every poll and pundit had predicted not only a fairly easy victory for Hillary Clinton but also a Democratic takeover of the Senate. Neither happened and, in retrospect, neither outcome was even really that close. A lot of analysis has followed about what went wrong in the prediction business. Were the polls that wrong? Are the formulae used to predict outcomes that skewed? The polls had not been that far off in the previous two presidential elections; in fact, their accuracy in recent elections made this year’s results, in most people’s eyes, all the more unbelievable. Did people lie to the pollsters? Were folks afraid to say that they were planning to vote for Trump? Did they lie to the exit pollsters about their votes? Were the pollsters so in the bag for Hillary that they made up data or manipulated the system?

The results of the election were counterintuitive to those leaning left, so the outcome is hard for them to reconcile. The results were also surprising to many of those leaning right, particularly those who had too much faith in the normally reliable polls. What can we learn from this failure of polling science, and how does it apply to medical science?

- The pollsters did not lie or distort the polls (and if they did, it doesn’t matter).

As I said above, virtually every pollster was wrong, and those who have too much faith in the science behind polling are the ones who were most shocked about the outcome of the election. More broadly, an increasing number of people have too much faith in science itself (we call these folks Scientismists). Science has become the new religion for many people, mostly college-educated adults and even more so those with advanced degrees. As with many religions, Scientism comes with false dogma, smugness, self-righteousness, and intolerance. Anyone who disagrees with whatever such a person considers to be science is dismissed as a closed-minded bigot. Facts are facts, they will claim, and simply invoking the word ‘science’ should be enough to end any argument.

But this way of thinking is absurdly immature. Those who think this way have made the dangerous misstep of separating science from philosophy (see below). You cannot understand what a fact even is (or knowledge in general) without understanding epistemology and ontology, which are, and forever will be, fields of philosophy. Interpreting science in the rigid, dogmatic sense that most scientism bigots espouse is incompatible with any reasonable understanding of epistemology and is shockingly dangerous.

History is full of scientific theories that were once widely believed and evidenced but which today have been replaced by new theories based on better data. Talk of geocentrism, for example, just sounds silly today, along with the four bodily humors in medicine or, perhaps, the hollow earth theory. But not too long ago, these ideas were believed with so much certainty that anyone who might dare to disagree with them did so at his own peril.

Now, I am not making the “science was wrong” argument, which is too often used to support nonsensical ideas like the flat earth or other such woo. True science doesn’t make dogmatic and overly certain assertions. Scientific ‘knowledge’ is usually transitory, and true scientists should constantly be seeking to disprove what they believe. Science is not wrong; rather, people have been wrong and used the name of science to justify their false beliefs (and in many cases to persecute others). Invoking science as an absolute authority is wrong (and dangerous). I would remind you that physicians who believed in the four bodily humors and/or denied the germ theory of disease in the 19th century felt just as certain about their beliefs as you might about, say, genetics. They were making their best guess based on available data, as we do today; but we should be open to other theories and new data. Don’t be so dogmatic and cocksure.

So the first lesson: If you truly understood science, then you would believe in it far less than you likely do. Facts are usually not facts (they are mostly opinions). It takes a keen sense of philosophy to keep science in its place. And be leery of those who start sentences with words like “Science has proven…” or “Studies show…”

How does this apply to the polls? Polls are scientific (as much as anything is), but that doesn’t make them free of bias or other errors. The wide variation among polls, and the vastly different interpretations of them, is evidence enough that the science of polling is far from exact. More than random error accounts for these differences; there are fundamental disagreements about how to conduct and analyze polls, and the subjective assumptions about the electorate vary from one pollster to the next.
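One way to check the claim that more than random error separates the polls is to compare their spread with the spread that sampling error alone would produce. The sketch below, in Python, uses the Pearson chi-square statistic for homogeneity of proportions divided by its degrees of freedom; the five poll results and sample sizes are invented for illustration, not real 2016 data.

```python
def dispersion_ratio(shares, sizes):
    """Observed spread of poll results relative to pure sampling error.

    Computes the Pearson chi-square statistic for homogeneity of k proportions,
    sum(n_i * (p_i - p_pooled)**2) / (p_pooled * (1 - p_pooled)),
    divided by its k - 1 degrees of freedom. Values near 1 fit sampling noise
    alone; values well above 1 point to systematic differences between polls.
    """
    total_n = sum(sizes)
    pooled = sum(p * n for p, n in zip(shares, sizes)) / total_n
    chi_square = sum(
        n * (p - pooled) ** 2 for p, n in zip(shares, sizes)
    ) / (pooled * (1 - pooled))
    return chi_square / (len(shares) - 1)


# Hypothetical polls: Candidate A's share in five polls of 1000 respondents each.
poll_shares = [0.44, 0.46, 0.48, 0.50, 0.52]
poll_sizes = [1000] * 5
print(f"Dispersion ratio: {dispersion_ratio(poll_shares, poll_sizes):.2f}")
```

With these made-up numbers the ratio comes out near 4: the polls disagree (in variance terms) about four times as much as sampling error would explain. A real analysis would also have to account for each pollster's design effects.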

A voter poll is not a sample of 1000 average people that reports voter preference for a candidate (say 520 votes for Candidate A versus 480 for Candidate B). We don’t know what the average person is, nor can we quantify the average voter. So a pollster collects information about the characteristics of the person being polled (gender, ethnicity, voting history, party affiliation, etc.) and then asks who that person is likely to vote for in the election. The poll might actually record that 700 people plan to vote for Candidate B while 300 plan to vote for Candidate A, but this raw data is then weighted according to the categories that the pollster assumes represent the group of likely voters. The raw data is adjusted (as it should be), so that the reported result might be 480 for Candidate A and 520 for Candidate B. But this important process of correcting for over-sampling and under-sampling of various demographics is a key area where bias can have an effect. Assumptions must be made, and the pollster’s biases invariably affect these assumptions.
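A minimal sketch of this weighting step (often called post-stratification), assuming just two hypothetical demographic groups and invented counts chosen to reproduce the 300/700 raw split and the 480/520 reported split described above. It shows that the reported numbers depend on the assumed make-up of the electorate, not only on what respondents said.

```python
# Hypothetical raw poll: 1000 respondents split into two demographic groups.
RAW_COUNTS = {
    "group_1": {"A": 150, "B": 650},  # 800 respondents: over-sampled group
    "group_2": {"A": 150, "B": 50},   # 200 respondents: under-sampled group
}


def raw_shares(counts):
    """Unweighted share of respondents preferring each candidate."""
    totals = {"A": 0, "B": 0}
    for prefs in counts.values():
        for candidate, n in prefs.items():
            totals[candidate] += n
    n_all = sum(totals.values())
    return {c: v / n_all for c, v in totals.items()}


def weighted_shares(counts, electorate):
    """Weight each group's preferences by its assumed share of likely voters."""
    result = {"A": 0.0, "B": 0.0}
    for group, prefs in counts.items():
        n_group = sum(prefs.values())
        for candidate, n in prefs.items():
            result[candidate] += electorate[group] * n / n_group
    return result


print(raw_shares(RAW_COUNTS))  # {'A': 0.3, 'B': 0.7}
# Two different (hypothetical) assumptions about who will turn out:
print(weighted_shares(RAW_COUNTS, {"group_1": 0.48, "group_2": 0.52}))
print(weighted_shares(RAW_COUNTS, {"group_1": 0.55, "group_2": 0.45}))
```

Under the first turnout assumption, A gets about 48% and B about 52%. Shifting the assumed electorate to 55/45 moves A to about 44%, even though not a single response changed.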

Such is the case for most scientific studies. If you already assume that caffeine causes birth defects, you are more likely to interpret data in a way that comes to this conclusion than if you did not already assume it. Think about this: dozens of very intelligent pollsters and analysts all worked with data that was replicated and resampled over and over again and all came to the wrong conclusion. They had a massive amount of data (compared to the average medical study) and resampled (repeated the experiment) hundreds of times; yet, they were wrong. How good do you really think a single medical study with 200 patients is going to be?

- Science is philosophy, and it is incredibly vulnerable to bias.

Have you ever wondered why someone who does advanced work in mathematics holds the same degree as someone who does advanced work in, say, art? They are both Doctors of Philosophy because they both study subjective fields of human inquiry. Science is philosophy. We all look at things in different ways, and our biases skew our sense of objective reality (they don’t skew reality, just our understanding of it). This is true even in fields that seem highly objective, like mathematics. A great example of this is in the field of probability. It really does matter whether you are a Bayesian or a Frequentist. Regardless of which philosophy you prefer (did you not know that you had a philosophical leaning?), you have to make certain assumptions about data in order to apply your equations, and here is where our biases rush in to help us make assumptions (not only am I a Bayesian, but I prefer the Jeffreys-Jaynes variety of Bayesianism). How you conduct science depends on your philosophical beliefs about the scientific process; how you analyze and categorize evidence and facts depends on your views of epistemology and ontology.
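To make the Bayesian point concrete, here is a sketch, assuming a simple Beta-binomial model, of two analysts who see the same hypothetical data (7 successes in 10 trials) but start from different priors: the Jeffreys prior Beta(1/2, 1/2) and an invented skeptical prior Beta(5, 20). The frequentist point estimate is shown alongside for comparison.

```python
def posterior_mean(prior_a, prior_b, successes, trials):
    """Posterior mean of a binomial proportion under a Beta(prior_a, prior_b) prior.

    The Beta prior is conjugate to the binomial likelihood, so the posterior is
    Beta(prior_a + successes, prior_b + trials - successes).
    """
    a = prior_a + successes
    b = prior_b + (trials - successes)
    return a / (a + b)


# The same hypothetical data for both analysts.
k, n = 7, 10
jeffreys = posterior_mean(0.5, 0.5, k, n)  # Jeffreys prior Beta(1/2, 1/2)
skeptic = posterior_mean(5, 20, k, n)      # hypothetical skeptical prior

print(f"Frequentist point estimate:     {k / n:.3f}")     # 0.700
print(f"Jeffreys-prior posterior mean:  {jeffreys:.3f}")  # 0.682
print(f"Skeptical-prior posterior mean: {skeptic:.3f}")   # 0.343
```

The priors encode exactly the kind of assumptions described above. With much more data the two posteriors would converge, but with a small sample the choice of prior largely drives the conclusion.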

If two people can look at the same data and draw different conclusions, then you know that bias is present and not being adequately mitigated. We all have bias, but some are more aware of it than others. Only by being aware of it can we begin to mitigate it. We cannot get rid of bias (and shouldn’t try to). Those who think that they are unbiased are usually the most dangerous folks; they are just unaware of their biases (or don’t care about them) and, in turn, are doing nothing to mitigate them. This is the danger of Scientism. The more you believe that you are right because ‘science’ or ‘facts’ say so, the less you truly know about science and facts and the less you are mitigating your personal biases. Bias is not just about your predisposition to dislike people who are different from you. Perhaps we should use the less derisive phrase “cognitive disposition to respond,” but bias is shorter and easier to understand. If the word makes you uncomfortable, then good. Bias is easy to understand in the context of journalism and pollsters; most political junkies are aware that over 90% of journalists are left-leaning. Bias is harder for people to understand in the context of medical science, but its impact on outcomes (poll results, study findings, the patient’s diagnosis) is the same.

Thus, while the pollsters and pundits used science to inform their opinions of the data, most were unaware of how they were cognitively affected by their philosophies. In some cases, this means that they used statistical methods that were inappropriate, and in other cases it means that their political philosophy (and their assumptions about how people think and make decisions) misinformed their data interpretation. This happens in every scientific study. The wind is always going to blow, and your sail is your bias. You can’t get rid of your sail, you just have to point it in the best way possible. The boat is going to move regardless (even if you take the sail down). William James, in The Will to Believe, said,

We all, scientists and non-scientists, live on some inclined plane of credulity. The plane tips one way in one man, another way in another; and may he whose plane tips in no way be the first to cast a stone!

- Data is in the eye of the beholder.

The plural of anecdote is not data. ― Marc Bekoff

How we interpret (and report) data affects how we and others synthesize and use it. Does the headline “College educated whites went for Hillary” fairly represent the fact that Hillary got 51% of the college-educated white vote? Maybe a better headline would have been “Clinton and Trump split the white, college-educated vote.” Worse still, the margin of error for this exit-polling “fact” is wide enough that the majority of college-educated whites may actually have preferred Trump. But the rhetorical implications of the various ways of reporting this data are enormous. Reporters and pundits make conscious choices about how to write such headlines.
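The margin-of-error point can be checked with the textbook normal-approximation formula for a 95% confidence interval on a proportion, z * sqrt(p(1 - p) / n). The sketch assumes a simple random sample of 1000 respondents, a hypothetical size; real exit polls use clustered designs whose intervals are wider still.

```python
import math


def proportion_interval(p, n, z=1.96):
    """Approximate 95% confidence interval (normal approximation) for a proportion."""
    margin = z * math.sqrt(p * (1 - p) / n)
    return p - margin, p + margin


# 51% support among a hypothetical 1000 college-educated white respondents.
low, high = proportion_interval(0.51, 1000)
print(f"95% interval: {low:.3f} to {high:.3f}")  # 95% interval: 0.479 to 0.541
```

Because the interval straddles 50%, data of this size cannot tell us which candidate actually won this group.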

Would you be surprised to know that Trump got a smaller percentage of the white vote than did Romney and a higher percentage of the Hispanic and African American vote than did Romney? But since fewer whites and non-whites voted in the 2016 election than in the 2012 election, we could choose to report that data by saying, “Trump received fewer black and Latino votes than Romney.” But so did Hillary compared to Obama. Fewer people voted in total. Obviously the point I am making is that how we frame (contextualize) data is immensely important and what context we choose to present for our data is subject to enormous bias.

I have made these points before in Words Matter and Trends… as these issues apply to medicine. Data without context is naked, and we must be painfully aware of how we clothe our data. Which facts we choose to emphasize (and which we tend to ignore) matters enormously. Spinning facts as positives (or negatives) is one way we bias others toward our potentially incorrect beliefs. Science is just as guilty of this faux pas as are politicians and preachers. Have you ever said that a study proves or disproves something, or that a test rules something in or out? If you did, you are guilty of this same mistake. Studies and tests don’t prove or disprove things; they simply change our level of certainty about a hypothesis. In some cases, when the data is widely variable and inconsistent, or when the test has poor utility (low sensitivity or specificity), a test or study may not change our pre-test probability at all. This was the case with polling data from the 2016 election: our pre-test assumption that a Clinton or Trump victory was equally likely should not have been changed at all given the poor quality of the data that was collected. This is also true of most scientific papers (for more on this concept, please read How Do I Know If A Study Is Valid?).
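The idea that a test or study merely shifts our certainty can be written with Bayes’ theorem in odds form: post-test odds = pre-test odds × likelihood ratio, where the positive likelihood ratio is sensitivity / (1 - specificity). In this minimal sketch (the sensitivity and specificity values are hypothetical), a test whose likelihood ratio is 1 leaves a 50% pre-test probability exactly where it was.

```python
def post_test_probability(pre_test, sensitivity, specificity, positive=True):
    """Update a probability with a test result via likelihood ratios.

    Assumes 0 < pre_test < 1 and that sensitivity and specificity are
    strictly between 0 and 1 (otherwise a likelihood ratio is undefined).
    """
    pre_odds = pre_test / (1 - pre_test)
    if positive:
        likelihood_ratio = sensitivity / (1 - specificity)
    else:
        likelihood_ratio = (1 - sensitivity) / specificity
    post_odds = pre_odds * likelihood_ratio
    return post_odds / (1 + post_odds)


# An uninformative test (sensitivity == 1 - specificity) changes nothing.
print(post_test_probability(0.5, sensitivity=0.6, specificity=0.4))
# A reasonably good test moves a 50% prior a long way.
print(post_test_probability(0.5, sensitivity=0.9, specificity=0.9))
```

The first call returns 0.5 (no change); the second returns about 0.9. Poor-quality polling data behaves like the first test.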

- The fact that a majority of scientists believe something doesn’t lend the idea any more credibility than if it were the minority position.

I want to pause here and talk about this notion of consensus, and the rise of what has been called consensus science. I regard consensus science as an extremely pernicious development that ought to be stopped cold in its tracks. Historically, the claim of consensus has been the first refuge of scoundrels; it is a way to avoid debate by claiming that the matter is already settled. Whenever you hear the consensus of scientists agrees on something or other, reach for your wallet, because you’re being had. Let’s be clear: the work of science has nothing whatever to do with consensus. Consensus is the business of politics. Science, on the contrary, requires only one investigator who happens to be right, which means that he or she has results that are verifiable by reference to the real world. In science consensus is irrelevant. What is relevant is reproducible results. The greatest scientists in history are great precisely because they broke with the consensus. There is no such thing as consensus science. If it’s consensus, it isn’t science. If it’s science, it isn’t consensus. Period. ― Michael Crichton

I cannot say this better than Crichton did, so I won’t try. The consensus of the polls and pundits was wrong. Similarly, scientific consensus has been wrong about thousands of other scientific ideas in our past. Consensus is the calling card of Scientism. Galileo said, “In questions of science, the authority of a thousand is not worth the humble reasoning of a single individual.” Often what we call scientific consensus would be more accurately termed “prevailing bias.” This type of prevailing bias is dangerous when it leads to closed-mindedness and bullying.

- Reductionism is dangerous.

Scientific questions like, “Why were the polls so wrong?” or, “What causes heart disease?” are complex and multifactorial. We don’t know all the issues that need to be considered to even begin to answer questions like these. Humans naturally tend to reduce such incredibly complex and convoluted problems to oversimplified causes so that we can wrap our minds around them. Here’s one such trope from the election: “Whites voted for Trump and non-whites voted for Clinton.” This vast oversimplification of why Trump won and Clinton lost is frustratingly misleading, but if you are predisposed to believe that this is the answer, then those words in The Guardian will suffice. Why not report, “People on the coast voted for Clinton and inland folks voted for Trump,” or “People in big cities voted for Clinton and people in smaller cities and rural areas voted for Trump,” or old people versus young people, rich versus poor, bankers versus truck drivers, or violists versus engineers?

It would be wrong to reduce a complex question to any of these oversimplified explanations, but when you see it done, you can tell the bias of the author. If someone studies dietary factors associated with Alzheimer’s disease, the factors they choose to study reveal their bias. What’s more, even though all of those issues may be relevant to the problem, when the evidence is presented in such a reductionist and absolute way, we quickly lose perspective on how important the issue truly is. So if only 51% of college-educated whites voted for Clinton, why present that particular fact (out of context and without a measure of the magnitude of effect)? The truth would appear to be that a college education was not really relevant to the election outcome. How much does eating hot dogs increase my chance of GI cancer? I’m not doubting that it does, but given how small the magnitude of effect is, I will continue eating hot dogs and not worry about it.
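The magnitude-of-effect point is easiest to see by converting a relative risk into an absolute one. The inputs below are hypothetical, chosen only to illustrate the arithmetic: a 5% baseline risk and an 18% relative increase (a relative risk of 1.18).

```python
def absolute_effect(baseline_risk, relative_risk):
    """Translate a relative risk into absolute terms.

    Returns the risk among the exposed, the absolute risk increase, and the
    number needed to harm (people exposed per one additional case).
    """
    exposed_risk = baseline_risk * relative_risk
    absolute_increase = exposed_risk - baseline_risk
    number_needed_to_harm = 1 / absolute_increase
    return exposed_risk, absolute_increase, number_needed_to_harm


# Hypothetical inputs: 5% baseline risk, relative risk of 1.18.
exposed, increase, nnh = absolute_effect(0.05, 1.18)
print(f"Risk if exposed: {exposed:.1%}")      # 5.9%
print(f"Absolute increase: {increase:.1%}")   # 0.9%
print(f"Number needed to harm: {nnh:.0f}")    # 111
```

“An 18% increase in risk” sounds alarming; an extra 0.9 percentage points, or one additional case for roughly every 111 people exposed, is the same fact with its magnitude attached.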

We are curious, and we all want to know the mechanisms of the diseases we treat, why people vote the way they do, and so on. Yet we must be careful not to rush to fill in details that we don’t really understand. Not everything has to be understood to be known. When we reduce complex problems, we almost always create misconceptions, and these misconceptions may impede progress and understanding of what is actually a complex issue. One of my favorite medical examples is meconium aspiration syndrome (MAS). There is a comfort for the physician in thinking of MAS as a disease caused by the aspiration of meconium during birth; but the reality is that the meconium likely has little to nothing to do with the often fatal variety of MAS. We oversimplified a complex problem, but the false idea has been incredibly persistent. We spent decades doing non-evidence-based interventions like amnioinfusion or deep suctioning at the perineum because they made sense. They made sense because we reduced a complex problem to simple elements (that were, as it turns out, wrong or at least incomplete). Other examples include the cholesterol hypothesis of heart disease and the mechanical theories of cervical insufficiency.

- People are bad at saying the words, “I don’t know.”

The quest for absolute certainty is an immature, if not infantile, trait of thinking. ― Herbert Feigl

The truth is that the pollsters’ main sin was overstating their confidence. Different pollsters look at the same data in remarkably different ways. Out of 61 tracking polls going into the election, only one (the L.A. Times/USC poll) gave Trump a lead. The other polls, it turns out, over-sampled minority voters (or under-sampled white voters, depending upon your bias). Why did this incorrect sampling happen? Bias. Pollsters were over-sold on the narrative of the changing demographics of America. Some have admitted that they felt it was impossible for any Republican ever to win again because of this. So they made sampling assumptions that were biased to produce that result (I am not saying this was done consciously; they honestly believed that what they were doing was correct).

Here’s the lesson: We make serious mistakes in science and in decision making when we pick the outcome before we collect (or analyze) the data. We must let the facts lead to a conclusion, not our conclusion lead to the facts. This principle is true for every scientific experiment ever conducted. The pollsters really didn’t know what percentage of whites and non-whites would vote in this election (this was much easier in the 2012 election, which was rhetorically similar to the 2008 election). But rather than express this uncertainty when reporting the data, the pollsters published confidence intervals and margins of error that were narrower than the data deserved (and this over-selling of confidence is promoted by a sense of competition with other pollsters, just as scientific investigators in every field seek to one-up their colleagues).
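One concrete way margins of error end up too narrow: weighting a poll reduces its effective sample size, so quoting the margin for the nominal sample overstates precision. The sketch below uses Kish’s effective sample size, (Σw)² / Σw², on a hypothetical sample of 1000 (800 respondents from an over-sampled group and 200 from an under-sampled one, weighted to 48% and 52% of the assumed electorate). It still treats the weights as known; uncertainty in the turnout assumptions themselves would widen the interval further.

```python
import math


def kish_effective_n(weights):
    """Kish's approximation to the effective sample size of a weighted sample."""
    return sum(weights) ** 2 / sum(w * w for w in weights)


def margin_of_error(p, n, z=1.96):
    """Half-width of an approximate 95% confidence interval for a proportion."""
    return z * math.sqrt(p * (1 - p) / n)


# Hypothetical sample of 1000: weight each respondent so that the group
# totals match the assumed electorate (48% and 52%).
n_total = 1000
weights = [0.48 * n_total / 800] * 800 + [0.52 * n_total / 200] * 200
n_eff = kish_effective_n(weights)

print(f"Effective sample size: {n_eff:.0f}")                 # 610
print(f"Naive margin: {margin_of_error(0.5, n_total):.1%}")  # 3.1%
print(f"Weighted margin: {margin_of_error(0.5, n_eff):.1%}") # 4.0%
```

Reporting the naive 3.1% for this poll would understate its real uncertainty by about a third, before even counting the uncertainty in the weights.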

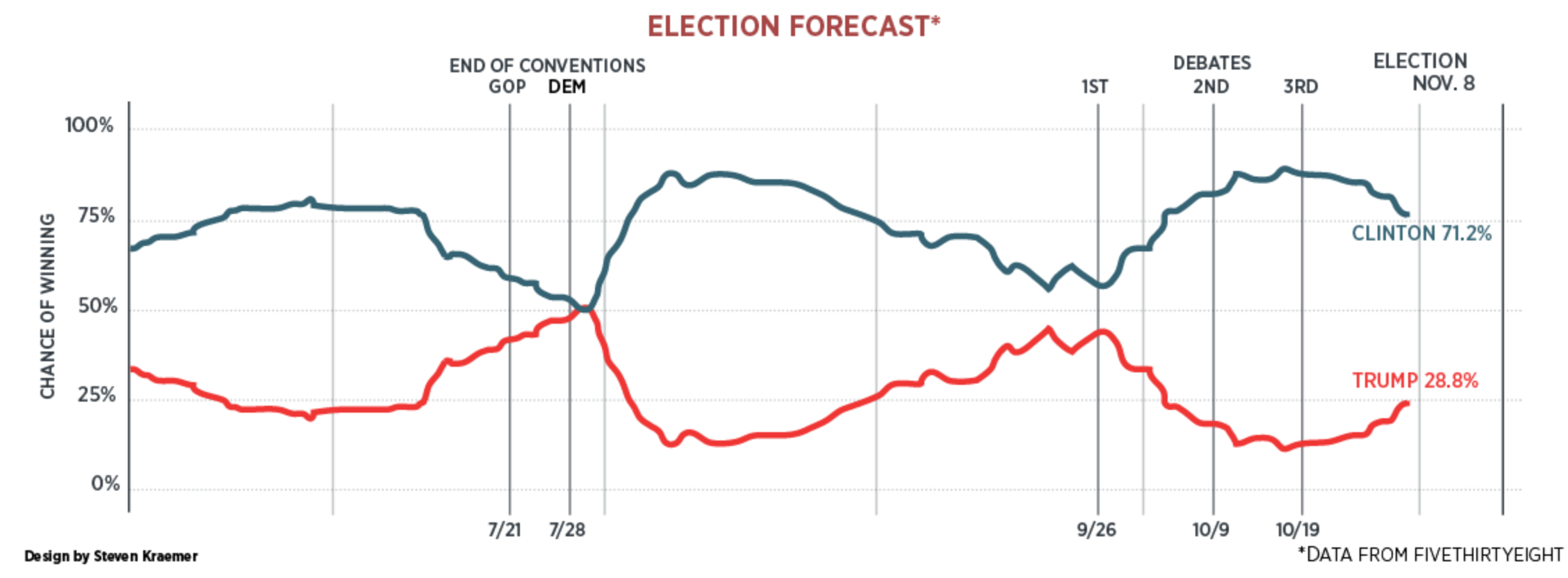

This already biased polling data was then used by outfits like fivethirtyeight.com to run through all the scenarios and project the likely outcome of the race. A group at Princeton reported a 99% chance that Clinton would win. Fivethirtyeight gave Clinton the lowest pre-election probability of winning, at 71.4%. In other words, the algorithms used by fivethirtyeight, Princeton, and others looked at basically the same data and, by making different philosophical assumptions, used the same probability science to estimate each candidate’s chance of winning, yet came up with wildly different predictions (from a low of 71.4% to a high of 99% chance of a Clinton victory). These models of reality were all vastly different from actual reality (a 0% chance of a Clinton victory).

But if we look more closely at how much the polls changed throughout the season, and at how much prognosticators changed their predictions, what we note is very wide variation. This amount of variation alone can be statistically tied to the level of uncertainty in the data, which is a product of both the pollsters’ mistaken assumptions about sampling and the pundits’ overconfidence in that data (and some poor statistical methodology). Error begets error. Nassim Taleb, author of The Black Swan, Antifragile, and Fooled by Randomness, consistently criticized the statistical methodologies of fivethirtyeight and other outlets throughout the last year. If you are interested in some statistical heavy-lifting, read his excellent analysis here.
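Taleb’s argument is technical, but a simplified version of its intuition can be sketched. Assume, hypothetically, that the polling margin moves like a driftless Gaussian random walk until election day. Then the probability that a current lead survives is Φ(lead / (σ√T)), where Φ is the standard normal CDF, σ the volatility, and T the time remaining. This is a toy model for illustration, not Taleb’s actual method or anyone’s forecasting model. It shows that the more volatile the numbers, the closer an honest forecast should sit to 50%.

```python
import math


def normal_cdf(x):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1 + math.erf(x / math.sqrt(2)))


def win_probability(lead, volatility, time_left):
    """P(final margin > 0) if the margin follows a driftless Gaussian random walk.

    lead: current margin (e.g. 0.03 for a 3-point lead)
    volatility: standard deviation of the margin per unit of time
    time_left: time remaining until the election, in the same units
    """
    return normal_cdf(lead / (volatility * math.sqrt(time_left)))


# The same hypothetical 3-point lead, with increasingly volatile polling.
for vol in (0.01, 0.05, 0.20):
    print(f"volatility {vol:.2f}: P(leader wins) = {win_probability(0.03, vol, 1.0):.2f}")
```

With these made-up inputs, the leader’s chances fall from near certainty to roughly 73% and then 56% as volatility rises, even though the lead itself never changes.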

What the pundits should have said was, “I don’t know who has the advantage,” and that would have been closer to the truth (this was Taleb’s own position). In other words, when we communicate scientific knowledge, it is important to report both the magnitude of effect of the observation and the probability that the “fact” is true (our confidence level). This underscores again the importance of epistemology (and, I would argue, Bayesian probabilistic reasoning). One of the basic tenets of Bayesian inference (the principle of indifference) is that when no good data is available to estimate the probability of an event, we assume that the event is as likely to occur as not (a 50/50 chance). This intuitive estimate, as it turns out, was much closer to the election outcome than any pundit’s prediction. But we didn’t know what we didn’t know, because people are bad at saying the words, “I don’t know.” As a side note, our intuition is very often better than shoddy attempts at explaining things “scientifically.” This theme runs throughout Taleb’s writings. For example, most people unexposed to polling results (which tend to bias their opinions) would have said that they were not sure who would win. That was a better answer than Nate Silver’s at the fivethirtyeight blog. Intuition (System 1 thinking) is a powerful tool when it is fed with good data and is mitigated for bias (but, yes, that’s the hard part).
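The principle of indifference, and the claim that a 50/50 guess beat the published forecasts, can be sketched in a few lines. The Brier score used here (the squared difference between the forecast probability and what happened; lower is better) is a standard scoring rule brought in for illustration, not something the forecasters above reported. The forecast values are the 71.4% and 99% figures quoted earlier in this post.

```python
def indifference_prior(outcomes):
    """Principle of indifference: equal probability for each exclusive outcome."""
    return {outcome: 1 / len(outcomes) for outcome in outcomes}


def brier_score(forecast, outcome):
    """Squared error of a probability forecast for a binary event (lower is better)."""
    return (forecast - outcome) ** 2


prior = indifference_prior(["Clinton", "Trump"])
forecasts = {
    "no information (50/50)": prior["Clinton"],
    "fivethirtyeight": 0.714,
    "Princeton group": 0.99,
}
clinton_won = 0  # the forecast event (a Clinton victory) did not happen
for name, p in forecasts.items():
    print(f"{name}: Brier score {brier_score(p, clinton_won):.3f}")
```

This prints scores of 0.250, 0.510, and 0.980 respectively. A single election is one data point, so the comparison scores these particular forecasts, not the long-run calibration of the methods behind them.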

Nassim Taleb is less subtle in his criticisms of Scientism and what he calls the IYI class (intellectual yet idiot):

With psychology papers replicating less than 40%, dietary advice reversing after 30 years of fatphobia, macroeconomic analysis working worse than astrology, the appointment of Bernanke who was less than clueless of the risks, and pharmaceutical trials replicating at best only 1/3 of the time, people are perfectly entitled to rely on their own ancestral instinct and listen to their grandmothers (or Montaigne and such filtered classical knowledge) with a better track record than these policymaking goons.

Indeed one can see that these academico-bureaucrats who feel entitled to run our lives aren’t even rigorous, whether in medical statistics or policymaking. They can’t tell science from scientism — in fact in their eyes scientism looks more scientific than real science.

Taleb has written at length about how poor statistical science affects policy-making (mostly in the economic realm). But we see this in medical science as well. Do sequential compression devices used at the time of cesarean delivery reduce the risk of pulmonary embolism? No. But they are almost universally used in hospitals across America and have become “policy.” Such policies are hard to reverse. In medicine, we create de facto standards of care based on low-confidence, poor evidence. Scientific studies should be required to estimate the level of confidence that the hypothesis under consideration is true (and this is completely different than what a P-value tells us), as well as the magnitude of the effect observed or level of importance. Just because something is likely to be true doesn’t mean it’s important.
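To see why a P-value is not the probability that a hypothesis is true, consider a simplified model, assumed here for illustration: some fraction of tested hypotheses are true, studies have a given statistical power, and significance is declared at alpha = 0.05. The probability that a “significant” finding reflects a true hypothesis then depends heavily on that prior fraction. The priors and power below are hypothetical.

```python
def prob_true_given_significant(prior, power, alpha=0.05):
    """Share of statistically significant results that reflect true hypotheses.

    Assumes unbiased studies: true hypotheses reach significance with
    probability `power`, false ones with probability `alpha`.
    """
    true_positives = power * prior
    false_positives = alpha * (1 - prior)
    return true_positives / (true_positives + false_positives)


for prior in (0.1, 0.5):
    p = prob_true_given_significant(prior, power=0.8)
    print(f"prior {prior:.0%}: P(true | p < 0.05) = {p:.2f}")
```

The same p < 0.05 corresponds to roughly a 64% or a 94% chance that the hypothesis is true, depending on an assumption that the P-value itself never reports. Neither number says anything about how large or important the effect is.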

- Consequentialism is a moral hindrance to knowledge.

Science has long been hindered by dogma. Most great innovations come from those who have been unencumbered by the weight of preconceptions. By age 24, Newton had conceptualized gravity and discovered the calculus. By age 29, Semmelweis had discovered that hand-washing could prevent puerperal sepsis. By age 30, Faraday had invented the electric motor. Thomas Jefferson wrote the Declaration of Independence at age 33. Why so young? Because these men and dozens of others had not already decided the limits of their knowledge. They did not suffer from the ‘persistence of ideas’ bias. They did not know what was possible (or impossible). They looked at the world differently than their older contemporaries because they had not already learned ‘the way it was.’ Therefore, they had less motivation to skew the data they observed in a prespecified direction or to make the facts fit a narrative. Again, they were more likely to abide by the howardism, “We must let the facts lead to a conclusion, not our conclusion lead to the facts.”

Much of pseudoscience starts with someone stating, “I know I’m right, now I just have to prove it!” This opens the door to bias that is often inextricable. I believe this is a form of consequentialism.

Consequentialism is a moral philosophy best explained with the aphorism, “The end justifies the means.” In various contexts, the idea is that the desired end (which is presumably good) justifies whatever actions are needed along the way to achieve it. Actions that might normally be considered unethical (at least from a deontological perspective) are justifiable because the end goal is worth it. Of course, this presumes two things: that the end really is worth it, and that the impact of all the otherwise unethical actions along the way doesn’t outweigh the supposed good of the outcome. Such pseudo-Machiavellian philosophy is really just an excuse to justify human depravity (Machiavelli never said the end justifies the means). Put another way, if you can’t get to a good outcome by using moral actions, the outcome is probably not actually good.

What does this have to do with the election and science? Simply this: when a scientist (or pollster, politician, journalist, or you) has predetermined what the outcome or conclusion should be, then all the data along the way is skewed according to this bias. You are subject to overwhelming cognitive bias. You have fallen prey to Jaynes’ mind projection fallacy. Real science lets the data speak for itself. But most science, as practiced, conforms data to fit a predetermined narrative (the narrative fallacy). Yet what we think is the right outcome or conclusion, determined before the data is collected, may or may not be correct. True open-mindedness is not having a preconception about what should be, what is possible or impossible, what is or has been or will be, but only a desire to discover truth and knowledge, regardless of where it might lead.

Just as pundits and pollsters were blind to the reality of a looming Trump victory (and practically inconsolable at the surprise that they had been so wrong), so too do medical scientists design studies with enough implicit bias to tweak the data to fit their agendas. Scientists should seek to disprove their hypotheses, not prove them. There is a reason why the triple-blinded, placebo-controlled, randomized trial is the gold standard (though not infallible): to remove bias. The idea that we should seek to disprove what we believe is not just an axiom; it’s foundational to conducting experiments properly. Be on the lookout for people who pick winners first (people or ideas) and then manipulate the game (rules or data) to make it happen.